pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", revision="fp16", torch_dtype=torch.float16).to("cuda")3 - Generative models

In our session about typical machine learning applications, we saw a bunch of prototypical tasks on various fields. A prominent example was generating images from a given description we wrote. This is possible thanks to the latest advances in generative models: stable diffusion, the current state-of-the-art text to image model.

In this homework, we will download a pre-trained stable diffusion model and we will have a bit of fun with it! However, we will not dive into the details or inner workings of stable diffusion, as they are far beyond the level we have covered in the course so far. The goal of this homework is to become familiar with the process of downloading a pre-trained model, follow a tutorial and troubleshoot any potential issues we may encounter (send your questions by email to Borja). All while having some fun!

The first part of the homework will focus on becoming familiar with the library that we will use to perform the tasks. To do so, we will adapt part of the tutorial in the fastai course by Pedro Cuenca, Patrick von Platen, Suraj Patil and Jeremy Howard, which uses the 🤗 Hugging Face 🧨 Diffusers library to download and use diffusion models.

Then, we describe the tasks to conduct to write your report, which are related to the introductory part.

0 - Introduction to use stable diffusion

In order to do the tasks in this homework, we first need to learn how to use the models.

To run stable diffusion on your computer you have to accept the model license. It’s an open CreativeML OpenRail-M license that claims no rights on the outputs you generate and prohibits you from deliberately producing illegal or harmful content. The model card provides more details. If you do accept the license, you need to be a registered user in 🤗 Hugging Face Hub and use an access token for the code to work. You have two options to provide your access token:

- Use the

huggingface-cli logincommand-line tool in your terminal and paste your token when prompted. It will be saved in a file in your computer. - Or use

notebook_login()in a notebook, which does the same thing.

Stable Diffusion Pipeline

StableDiffusionPipeline is an end-to-end diffusion inference pipeline that allows us to generate images with just a few lines of code. Many Hugging Face libraries (along with others like scikit-learn) use the concept of a “pipeline” to indicate a sequence of steps that achieve a task when combined.

We use from_pretrained to create the pipeline and download the pretrained weights. We indicate that we want to use the fp16 (half-precision) version of the weights, and we tell diffusers to expect the weights in that format. This allows us to perform much faster inference with almost no discernible difference in quality. The string passed to from_pretrained in this case (CompVis/stable-diffusion-v1-4) is the repo id of a pretrained pipeline hosted on Hugging Face Hub; it can also be a path to a directory containing pipeline weights. The weights for all the models in the pipeline will be downloaded and cached the first time you run this cell.

Let’s create our pipeline!

If your GPU is not big enough to use pipe, run pipe.enable_attention_slicing() in the cell right below.

As described in the docs: “When this option is enabled, the attention module will split the input tensor in slices, to compute attention in several steps. This is useful to save some memory in exchange for a small speed decrease.”

#pipe.enable_attention_slicing()Now that we have a working pipeline, let’s generate some images. As we saw in class, we need to provide this model with a description of the image that we want to generate.

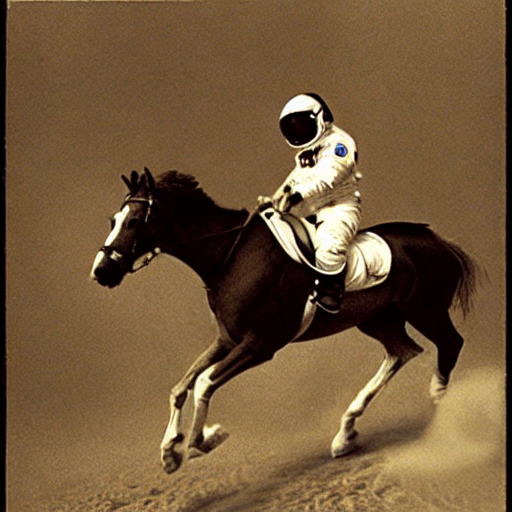

prompt = "a photograph of an astronaut riding a horse"Code

images = pipe(prompt).images

images[0]

These models are stochastic, so every time we execute the code with the same prompt, we will obtain a different image (try executing the cell above again!). We can fix the result by providing a manual seed for the random number genertor in pytorch with torch.manual_seed(), as we do below. This allows us to consistently see the effect of the parameters on the output.

Code

torch.manual_seed(1024)

pipe(prompt).images[0]

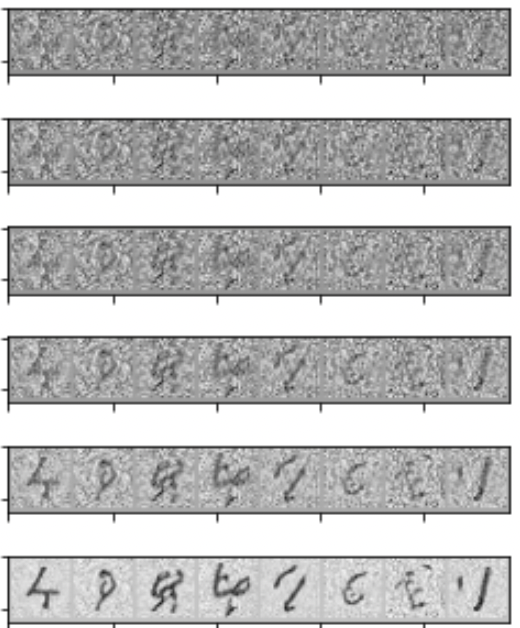

Running the pipeline shows a progress bar with a certain number of steps. This is because Stable Diffusion is based on a progressive denoising algorithm that is able to create convincing images from pure random noise. Models in this family are known as diffusion models. Here, we show an example of the process starting from random noise at top, to progressively improved images towards the bottom, of a model that creates handwritten digits.

We can change the number of denoising steps in a trade-off between faster predictions and image quality. Let’s see what happens when we try to generate the image from above with only 3 and 16 steps.

Code

torch.manual_seed(1024)

pipe(prompt, num_inference_steps=3).images[0]

Code

torch.manual_seed(1024)

pipe(prompt, num_inference_steps=16).images[0]

Classifier-Free Guidance

Classifier-Free Guidance is a method to increase the adherence of the output to the conditioning signal we used (the text).

Roughly speaking, the larger the guidance the more the model tries to represent the text prompt. However, large values tend to produce less diversity. The default is 7.5, which represents a good compromise between variety and fidelity. This blog post goes into deeper details on how it works.

We can generate multiple images for the same prompt by simply passing a list of prompts instead of a string.

num_rows, num_cols = 4, 4

prompts = [prompt] * num_cols

guidances = [1.1, 3, 7, 14]images = concat(pipe(prompts, guidance_scale=g).images for g in guidances)Code

image_grid(images, rows=num_rows, cols=num_cols)

Negative prompts

Negative prompting refers to the use a second prompt to increase the difference between generations of the original prompt and the second one.

prompt = "Labrador in the style of Vermeer"Code

torch.manual_seed(1000)

pipe(prompt).images[0]

neg_prompt = "blue"Code

torch.manual_seed(1000)

pipe(prompt, negative_prompt=neg_prompt).images[0]

With the negative prompt, we move more towards the direction of the positive one reducing the importance of the negative prompt in our composition. Here, we see how the blue scarf has disappeared from the image.

Image to Image

Even though Stable Diffusion was trained to generate images, and optionally drive the generation using text conditioning, we can use the raw image diffusion process for other tasks.

For example, instead of starting from pure noise, we can start from an image an add a certain amount of noise to it. This way, we skip the initial steps of the denoising process and pretend our noisy image is what the algorithm came up with from the raw noise. Then we continue the diffusion process from that state as usual. This usually preserves the composition, although the details may change significantly. This process works wonders with sketches!

We can do these operations with a special image to image pipeline: StableDiffusionImg2ImgPipeline. This is the source code for its __call__ method, which takes an initial image, adds some noise to it and runs the diffusion process from there.

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

).to("cuda")As an example, we can use the sketch created by VigilanteRogue81.

Code

p = FastDownload().download('https://s3.amazonaws.com/moonup/production/uploads/1664665907257-noauth.png')

init_image = Image.open(p).convert("RGB")

init_image

Now let’s create some images starting from the sketch.

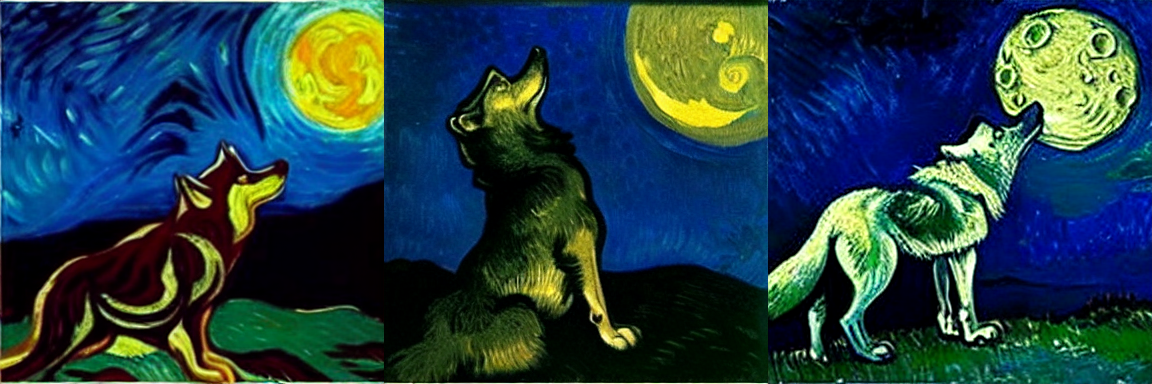

prompt = "Wolf howling at the moon, photorealistic 4K"Code

torch.manual_seed(1000)

images = pipe(prompt=prompt, num_images_per_prompt=3, image=init_image, strength=0.8, num_inference_steps=50).images

image_grid(images, rows=1, cols=3)

When we get a composition we like we can use it as the next seed for another prompt and further change the results. For example, let’s take the third image above and try to use it to generate something in the style of Van Gogh.

prompt = "Oil painting of wolf howling at the moon by Van Gogh"

init_image = images[2]Code

torch.manual_seed(1000)

images = pipe(prompt=prompt, num_images_per_prompt=3, init_image=init_image, strength=1, num_inference_steps=70).images

image_grid(images, rows=1, cols=3)

Creative people use different tools in a process of iterative refinement to come up with the ideas they have in mind. Here’s a list with some suggestions to get started.

1 - Stable diffusion

Now that we know how to use the model, we can proceed to experiment with it and study some of its most basic properties. Write a report detailing the results and conclusions drawn by completing the following tasks.

1.1 - Basic stable diffusion

We start by experimenting with the basic model. Come up with a prompt and generate various images for it (have some fun!). Show three representative images and comment on the general behaviour of the model and trends that you may observe. For instance, comment on whether it can consistently account for all the aspects in your prompt or whether it struggles to include some of those. Do all images have the same composition or do you observe significant changes in the layouts?

1.2 - Messing with the inference process

Experiment with the inference process. Define a prompt (could be the same as before) and fix a random seed with torch.manual_seed, as we show in the introductory part to always obtain the same outcome for the same prompt. Generate images with a different number of inference steps: 3, 15, 50, and as much as you can go in a reasonable time (higher than 60). Do so by appropiately setting num_inference_steps. Show the resulting images and describe what you observe qualitatively. In what sense do the images improve as we increase the number of steps?

In the astronaut riding a horse above, we see that with very few steps the model cannot generate a coherent image. Increasing the number of steps, the image starts to shape up according to our prompt but it struggles with some basic physical properties, such as the horse legs (it has only three and one of them has a ghost hoof). If we increase the number of steps further, these errors disappear and we obtain the first image.

2 - Advanced parameters

Now that we have a qualitative understanding of stable diffusion. Let’s play with some more advanced aspects. For now, you do not need to fully understand them. If you are interested, we can dive deeper in the last lesson of our course.

2.1 - Classifier-free guidance

In the introductory part above, we have seen how to tune our method’s adherance to our description. Define a prompt and a random seed. Generate images for four guidance values 1.1, 3.5, 7 and 14. Comment on the general behaviour.

2.2 - Negative prompts

Repeat the exact same process from 2.1 but, this time, include an appropiate negative prompt for our image generation (keep the original prompt and seed). How does the guidance impact the effect of the negative prompt?

Use the results from the best images to define an appropiate negative prompt.

3 - Image to image

Choose one of the following image to image tasks:

(a) Starting from a sketch

Draw a sketch (e.g. in paint), save it in png format and use it to generate a few cool images. Show the sketch and a few representative examples of the resulting images. Comment on the model’s behaviour, which parts of the sketch are preserved or discarded (composition? colors?) and what they have become.

(b) Starting from an image

Generate an image with the diffusion model and use it as starting point to generate new images. Show the initial image and a few representative examples of the resulting ones. Comment on the model’s behaviour, which parts of the image have been preserved (composition? colors?) and what they have become.